It's been a few weeks since our loss and I still feel devastated. It hurts to watch or even think about the game and so I decided to leave LA and reflect on the last split. I'll try to elaborate on aspects that the public may be more unfamiliar with. While I've been with the TSM organization since 2015, this was my first split as a coach. The position has a great deal of responsibility, primarily because I have complete control over the players in terms of strategic direction. Since my predecessors left me with no discernible structure, the hardest part was building it from scratch.

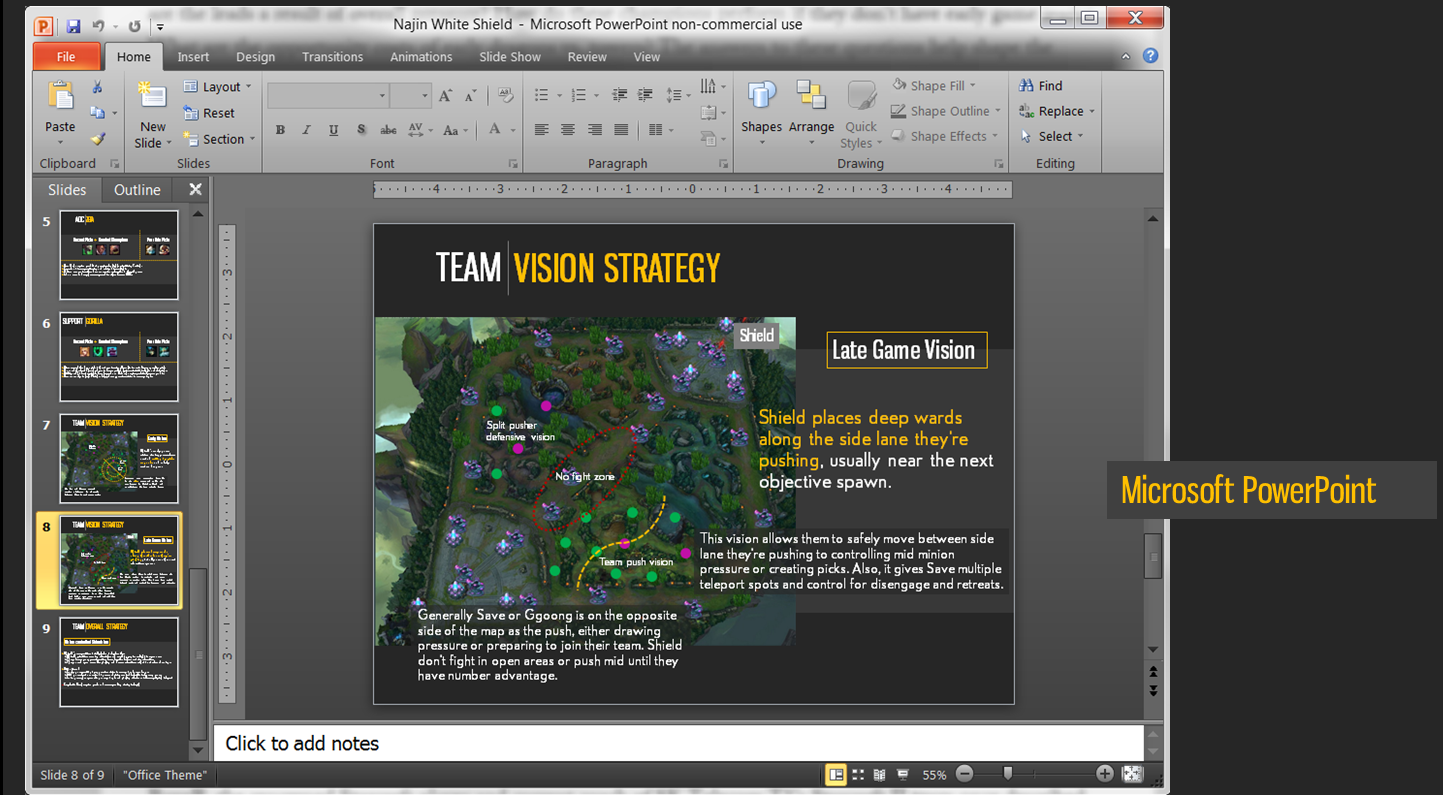

First, as a team we decided on a few game axioms upon which we built a simple system to evaluate different phases of the game. This gave the team a singular direction in the way we played, and gave us a good structure to review and build our collective knowledge base. Next, as the person solely in charge of pick/ban, I had to develop a set of rules, which took a lot of time. It started with simply building cohesive compositions, and every week added something new: adapting to patch changes, drafting to test matchups and strategic game plans, working around scrim opponents' specific tendencies, and hiding a part of our strategy or a pick from every team, to name the most relevant. Finally, there was the hierarchy of elements to work on: mechanics, game fundamentals, communication, higher-level plays/strategies, adaptations, etc. This 3-part system had to be constantly examined and optimized. For example, halfway through the split, I recognized that we had to establish a new "reset phase" to organize a subset of situations we were glossing over in reviews. After failures in the early game, we started actively devoting time to adding vision setups and plays from behind to the playbook, something we were inexperienced with at the beginning of the split. All of these had to follow a certain hierarchy, depending on the weekend's opponent, patch changes, scrim partners, relevance of the issue, and more.

I also had the pleasure of working with three extremely hard-working and talented people: Anand Agarwal, Brian Pressoir, and Matthew Schmeider. In addition to doing analysis and small projects for the team, one of their primary responsibilities was position coaching. After every scrim and team review, each player would meet with their position coach for the week to go over specific details of the game and personalized learning goals. We also brought each position coach onsite for a week to work with us, to help build relationships with the players and familiarize them with the details of working on staff. While onsite, they helped me set the strategic direction for the week, work through prep and review, and pioneer objectives for the team to learn from. I want to thank all of them for their hard work and dedication throughout the split; their help was instrumental to our progress.

A large part of the success of Korean teams can be attributed to the systems that their organizations have built and have been refining for a long time. During the bootcamp and Worlds, I found myself questioning and changing core axioms of the systems we'd established earlier, things I could only learn through experience. I will never stop wondering whether, if we'd made some small changes in our approach or in certain areas of focus, those small margins would've propelled us into the quarters or beyond. Despite a lot of factors that didn't go our way, I still felt each loss weigh heavily on my shoulders. These lessons are much harder to learn in NA, mostly due to a lack of experienced coaching philosophies. In the same way that players need skilled opponents to practice against in order to improve, coaches, especially inexperienced ones, need different perspectives to learn from. This split, only Tony and Reapered were systematic in the way they approached (strategic) coaching, evident from their pick/bans and the strategies they practiced in scrims, but even then I could see the deficiencies in their teams' styles, as I'm sure they could see in mine. The best Korean teams are disciplined in the fundamentals of the game and adapt with a ruthless efficiency. To compete with them, western organizations need to build and refine their own systems by allowing their coaching staff to gain experience and by helping with professional development.

As disappointed as I am by our Worlds performance, I am equally proud of our accomplishments during the summer. We shared the same goal with a passion that resonated in every practice and stage performance, and the pursuit of excellence felt more important than the result each weekend. This ethos will be ingrained as the benchmark upon which TSM will continue to operate. There is nothing more inspiring than working with a group of people willing to dedicate every minute of the day to the game. I'm glad our fans got to take this journey with us (thanks Max), and I hope they were proud, connecting with the story of this team and how much it has matured over the course of the year, striving to do something extraordinary.

I love my team.